Artificial intelligence is powering more of our daily operations than ever before—from virtual assistants and recommendation engines to fraud detection and autonomous vehicles.

But what happens when these intelligent systems suddenly stop working or start making bad decisions?

Unlike traditional software, AI operates with a level of autonomy and complexity that makes failures harder to predict—and harder to fix. Whether it’s a data issue, a model glitch, or a system outage, the ripple effects can be wide-reaching and costly.

In this article, we’ll unpack what it means when an AI system goes down, the kinds of real-world problems it can create, and how organizations can prepare for the unexpected. Because in a world driven by algorithms, even a small error can lead to major disruption.

Why AI Systems Fail: Top Causes You Shouldn’t Ignore

Artificial Intelligence promises speed, scale, and smart automation—but it doesn’t always deliver. AI system failures are more common than most organizations expect, and they often stem from a handful of recurring issues.

Understanding these failure points is critical if you want to avoid expensive mistakes, reputational damage, or ethical missteps.

Here are the top reasons AI systems break down:

1. Unreliable or Incomplete Data

At the core of every AI model is data. A system can’t make good decisions when that data is:

- Noisy or inaccurate

- Missing important fields

- Out of date

- Unbalanced in representation

Poor data quality is one of the leading reasons AI models fail in the real world.
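Several of these data problems can be measured before a model is ever trained. The sketch below is a minimal illustration, not a complete data-quality framework: the record layout, field names, and the one-year staleness cutoff are all assumptions made for the example, and it does not attempt to detect noisy values.

```python
from datetime import date

def audit_records(records, required_fields, label_field, today, max_age_days=365):
    """Return simple data-quality indicators for a list of dict records."""
    total = len(records)
    # A record counts as incomplete if any required field is None or empty.
    missing = sum(
        1 for r in records
        if any(r.get(f) in (None, "") for f in required_fields)
    )
    # A record counts as stale if it was last updated too long ago.
    stale = sum(1 for r in records if (today - r["updated"]).days > max_age_days)
    # Share of each label value, to reveal unbalanced representation.
    label_counts = {}
    for r in records:
        label = r.get(label_field)
        label_counts[label] = label_counts.get(label, 0) + 1
    return {
        "missing_rate": missing / total,
        "stale_rate": stale / total,
        "label_share": {k: v / total for k, v in label_counts.items()},
    }

# Hypothetical records, invented for illustration only.
records = [
    {"amount": 120.0, "country": "DE", "is_fraud": 0, "updated": date(2024, 5, 1)},
    {"amount": None,  "country": "US", "is_fraud": 0, "updated": date(2024, 4, 12)},
    {"amount": 80.5,  "country": "",   "is_fraud": 0, "updated": date(2021, 1, 3)},
    {"amount": 990.0, "country": "FR", "is_fraud": 1, "updated": date(2024, 5, 20)},
]
report = audit_records(records, ["amount", "country"], "is_fraud", today=date(2024, 6, 1))
print(report)
```

Which rates are acceptable depends on the use case; the point is to turn "poor data quality" into numbers a team can track.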

2. Hidden Bias in the Data or Model

AI systems reflect the data they’re trained on. If the training data contains biased patterns—whether due to historical inequalities or sampling gaps—the AI will replicate those same flaws. This leads to:

- Discriminatory results

- Reduced fairness

- Legal and ethical liabilities

Bias is one of the hardest issues to detect and correct, especially after deployment.
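One simple way to surface this kind of bias is to compare outcome rates across groups. The following sketch assumes decisions are available as (group, approved) pairs; the group names and numbers are made up, and a ratio check like this is only one of many possible fairness measures.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity).

    Assumes at least one group has a non-zero approval rate.
    """
    return min(rates.values()) / max(rates.values())

# Hypothetical decisions: group A approved 8 of 10 times, group B 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))
```

A ratio well below 1.0 does not prove discrimination on its own, but it is a signal that the model's outputs deserve a closer review.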

3. Unclear Problem Definition

You can’t hit a target you can’t see. AI projects often struggle because no one defines what success looks like. Without a sharp focus, the model may:

- Optimize the wrong metric

- Miss key variables

- Deliver results that don’t serve the business goal

A well-scoped objective is a foundation, not a formality.

4. Overfitting and Underfitting

Training a model is a balancing act. If your model becomes too specialized to its training data (overfitting), it won’t perform well with new inputs.

If it’s too simplistic (underfitting), it won’t capture the complexity of the task. Both lead to disappointing performance and unstable outputs.
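A common first diagnostic is to compare a model's score on its training data with its score on held-out validation data. The helper below is a rough sketch of that comparison; the function name and both thresholds are illustrative assumptions, not standard values.

```python
def diagnose_fit(train_score, val_score, min_score=0.8, max_gap=0.1):
    """Classify a model from its training and validation scores (0 to 1).

    min_score and max_gap are hypothetical thresholds for this example.
    """
    # A large gap means the model memorized training data it cannot generalize from.
    if train_score - val_score > max_gap:
        return "overfitting"
    # A low training score means the model cannot even fit the data it has seen.
    if train_score < min_score:
        return "underfitting"
    return "ok"

print(diagnose_fit(0.99, 0.72))  # large train/validation gap
print(diagnose_fit(0.62, 0.60))  # poor on both sets
print(diagnose_fit(0.91, 0.88))  # small gap, acceptable score
```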

5. Neglecting Model Updates

AI isn’t a “set-it-and-forget-it” solution. As conditions change—consumer behavior, external trends, or internal processes—models can become outdated.

Known as model drift, this gradual misalignment causes accuracy to slip. Regular monitoring and retraining are essential to keep performance high.
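Drift can be monitored by comparing the distribution of a feature at training time with what the model sees in production. The sketch below uses the population stability index (PSI), one of several possible drift measures; the bin edges and sample values are invented for illustration, and the 0.2 alert level is a commonly cited rule of thumb rather than a fixed standard.

```python
import math

def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two samples of one numeric feature."""
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges the value has reached or passed.
            counts[sum(1 for e in edges if v >= e)] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values at training time and in production.
training = [10, 20, 30, 40, 50, 60, 70, 80]
live = [55, 60, 65, 70, 80, 85, 90, 95]
edges = [25, 50, 75]

drift = psi(training, live, edges)
if drift > 0.2:  # assumed alert threshold
    print(f"PSI={drift:.2f}: input distribution has shifted, consider retraining")
```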

6. Deployment and Integration Failures

Moving from development to production isn’t always seamless. Technical issues often arise during deployment, including:

- Infrastructure mismatches

- Integration bugs

- Latency problems

- Scaling failures

AI needs to work in the wild, not just in lab conditions.

7. Lack of Interpretability

When AI systems produce results no one can explain, trust breaks down. This is especially critical in regulated industries like healthcare, finance, and legal tech.

If stakeholders can’t understand why a model made a decision, it becomes impossible to:

- Justify outcomes

- Troubleshoot issues

- Ensure compliance
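For simple models, an explanation can be as direct as listing how much each input pushed the score up or down. The sketch below assumes a linear scoring model with made-up feature names and weights; more complex models need dedicated explanation techniques that are outside the scope of this example.

```python
def explain_linear(weights, bias, features):
    """Return the score and per-feature contributions, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical credit-scoring weights and applicant, for illustration only.
weights = {"income": 0.5, "debt_ratio": -2.0, "late_payments": -0.75}
applicant = {"income": 4.0, "debt_ratio": 0.5, "late_payments": 2}

score, ranked = explain_linear(weights, 0.25, applicant)
print("score:", score)
for name, effect in ranked:
    print(f"{name}: {effect:+.2f}")
```

A breakdown like this gives reviewers something concrete to check when a decision is challenged.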

8. Failure to Anticipate Edge Cases

AI models are often trained on average scenarios. But life is full of outliers. When rare but important situations occur, unprepared models can behave unpredictably—or dangerously.

For example, an AI driving system might handle highways flawlessly but misinterpret unusual urban layouts.
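One modest safeguard is to flag inputs that fall outside the range the model saw during training, so they can be handled more cautiously. The sketch below is a simplified illustration: the feature names, values, and 10% margin are assumptions, and a per-feature range check will not catch every unusual combination of inputs.

```python
def fit_ranges(rows):
    """Record the min and max of each feature seen in the training rows."""
    names = rows[0].keys()
    return {n: (min(r[n] for r in rows), max(r[n] for r in rows)) for n in names}

def out_of_range(sample, ranges, margin=0.1):
    """Return the features whose value lies outside the training range plus a margin."""
    flagged = []
    for name, (lo, hi) in ranges.items():
        slack = (hi - lo) * margin
        if not (lo - slack <= sample[name] <= hi + slack):
            flagged.append(name)
    return flagged

# Hypothetical highway-only training data.
training_rows = [
    {"speed_kmh": 90, "lane_width_m": 3.5},
    {"speed_kmh": 130, "lane_width_m": 3.75},
    {"speed_kmh": 110, "lane_width_m": 3.6},
]
ranges = fit_ranges(training_rows)

highway = out_of_range({"speed_kmh": 100, "lane_width_m": 3.6}, ranges)
narrow_street = out_of_range({"speed_kmh": 25, "lane_width_m": 2.4}, ranges)
print(highway, narrow_street)
```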

9. Security Weaknesses and Adversarial Risks

AI can be manipulated. Hackers and bad actors can craft malicious inputs to deceive models, a technique known as an adversarial attack. These attacks can cause:

- Misclassification

- Data breaches

- Misleading outputs

AI security is still an emerging discipline, and underestimating this threat can be costly.
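To make the idea concrete, the toy example below shows how a small, deliberate change to an input can flip a classifier's decision. It assumes a tiny linear classifier with invented weights and nudges each feature against the sign of its weight; real attacks target far more complex models, so treat this only as an illustration of the principle.

```python
def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def classify(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def perturb_toward_negative(weights, x, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model and input.
weights, bias = [2.0, -1.0, 0.5], -0.5
x = [0.6, 0.4, 0.2]
x_adv = perturb_toward_negative(weights, x, epsilon=0.15)

print("original:", classify(weights, bias, x))
print("perturbed:", classify(weights, bias, x_adv))
```

No feature moves by more than 0.15, yet the predicted class changes, which is why input validation and robustness testing matter.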

10. Misalignment Within the Organization

Not all AI issues are technical. Many failures start with miscommunication between data teams, business units, and executives. Without alignment, AI projects may:

- Chase the wrong goals

- Burn through budget without ROI

- Stall due to lack of support

Success requires not just smart code—but smart collaboration.

What Happens When AI Fails? Real-World Consequences You Can’t Ignore

AI is embedded in everything—from our mobile apps to mission-critical enterprise systems. But when AI systems break, malfunction, or behave unpredictably, the consequences can be serious—and sometimes devastating.

These are not just backend technical glitches; they can impact people’s lives, a company’s bottom line, and public trust.

Here’s what can happen when AI systems fail in the real world:

1. Revenue Losses and Operational Chaos

AI often runs the behind-the-scenes engines of modern businesses—handling logistics, pricing, fraud detection, and more. A sudden failure can cause:

- Missed transactions

- Supply chain delays

- Faulty billing

- Inaccurate analytics

Even a short disruption can translate into significant financial losses, especially in high-volume industries like e-commerce or banking.

2. Frustrated Customers and Damaged Loyalty

When AI-driven tools like chatbots, recommendation engines, or personalized search go offline or produce incorrect outputs, the customer feels it immediately. This can lead to:

- Poor service experiences

- Confusing product suggestions

- Abandoned purchases

- Declining retention rates

People expect smart systems to work seamlessly. When they don’t, customers may simply walk away—and not come back.

3. Human Safety Put at Risk

In sectors where AI directly influences physical environments—like medicine, transportation, or emergency response—a failure can endanger human lives. Consider:

- Incorrect patient treatment from AI-assisted diagnostics

- Malfunctions in autonomous vehicles or drones

- AI-driven systems misrouting emergency responders

The higher the stakes, the greater the risk when AI makes the wrong call.

4. Brand Reputation on the Line

Public-facing AI errors, especially those involving bias, offensive content, or erratic behavior, can go viral in hours. This can cause:

- Embarrassing media headlines

- Social media backlash

- Customer boycotts

- Loss of market trust

A single AI mistake can undo years of brand-building overnight.

5. Regulatory Penalties and Legal Action

As governments tighten the rules around AI, compliance failures can result in:

- Regulatory investigations

- Class-action lawsuits

- Hefty fines

- Mandatory system shutdowns

For example, if an AI system violates GDPR, misuses personal data, or delivers discriminatory outcomes, the legal fallout can be swift and expensive.

6. Security Breaches and Exploits

When AI fails to detect threats—or worse, becomes a target—security gaps widen. AI systems are susceptible to:

- Adversarial attacks

- Data poisoning

- Manipulated inputs designed to deceive models

A compromised AI system can leak sensitive data, expose vulnerabilities, or be weaponized against its own users.

7. Losing the Competitive Edge

Many businesses implement AI to gain speed and intelligence that competitors don’t have. But a system failure can:

- Delay product rollouts

- Undermine AI-driven features

- Force a return to manual workflows

This not only slows progress but can allow rivals to seize market share while you’re stuck fixing problems.

8. Growing Distrust in Automation

Repeated AI failures erode confidence in the technology. The fallout includes:

- Resistance from internal teams

- Customer hesitation around AI-based products

- Reduced willingness to invest in further automation

Over time, this creates friction in digital transformation efforts and slows innovation.

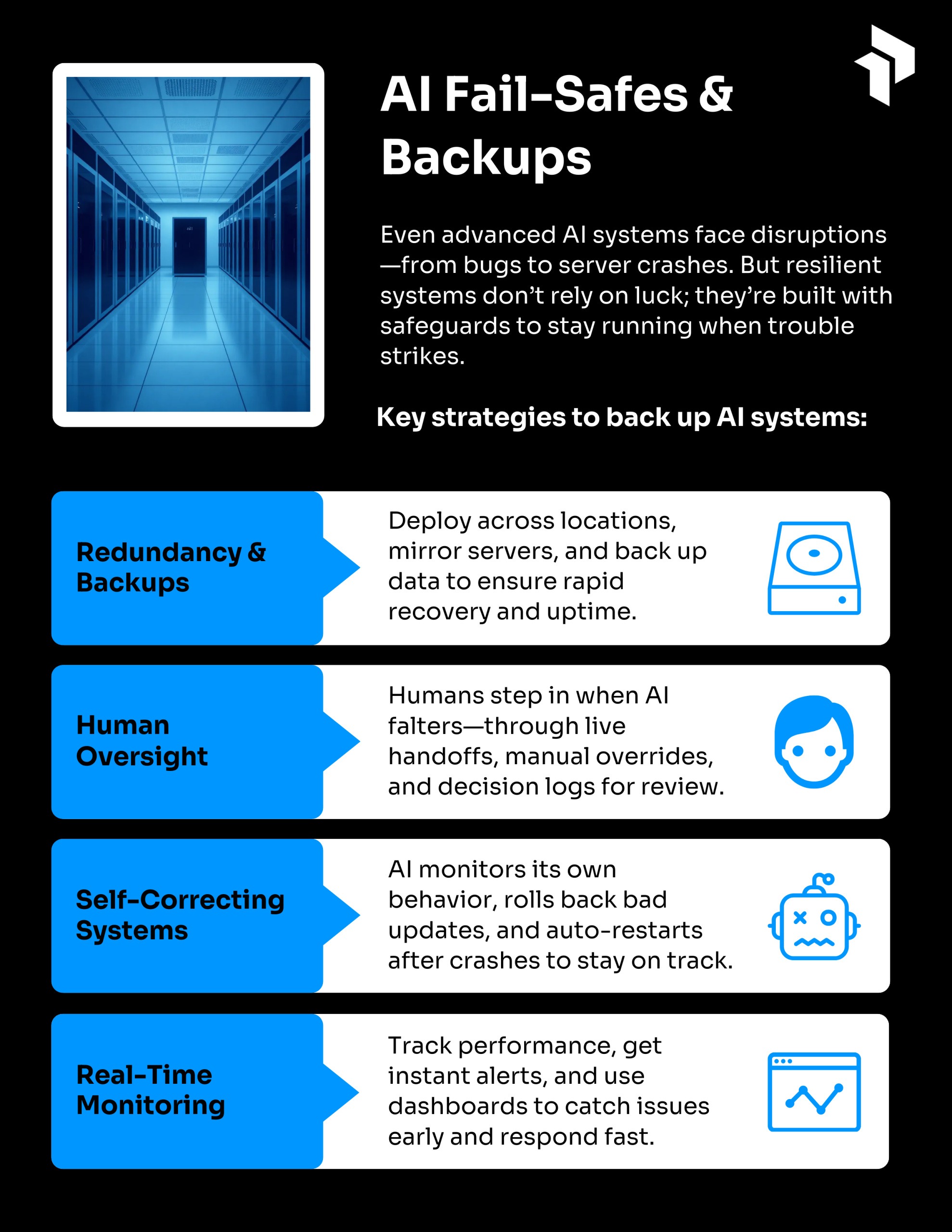

Fail-Safes and Backup Strategies

Even the most advanced AI systems aren’t immune to disruptions. From unexpected bugs to server crashes, things can—and do—go wrong.

But well-designed systems don’t just rely on hope. They’re built with layers of protection that keep operations running, even when trouble hits. Here’s how.

1. Built-In Redundancy and Reliable Backups

One of the most effective ways to prepare for AI system failure is redundancy—essentially having a Plan B (and sometimes a Plan C).

- Multi-location cloud hosting: Deploying AI models across multiple data centers or cloud providers makes it far less likely that one region’s outage will take everything down.

- Mirror servers: Backup systems that can instantly replace a failed one without users noticing.

- Ongoing data backups: Critical information is regularly copied and stored securely to support rapid recovery.

Scenario: If an AI assistant managing customer queries crashes, a redundant system can immediately take over, keeping response times smooth and uninterrupted.
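The scenario above can be sketched as a simple failover chain: try the primary system first, then fall back to a replica if it fails. The backend functions here are hypothetical stand-ins for real model endpoints, and a production setup would typically add timeouts, health checks, and retries.

```python
def answer_with_failover(query, backends):
    """Try each (name, callable) backend in order and return the first success."""
    errors = []
    for name, backend in backends:
        try:
            return name, backend(query)
        except Exception as exc:  # any failure moves on to the next backend
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))

# Hypothetical backends: the primary is down, the replica is healthy.
def primary_model(query):
    raise ConnectionError("primary region unavailable")

def replica_model(query):
    return f"answer to {query!r}"

served_by, reply = answer_with_failover(
    "where is my order?",
    [("primary", primary_model), ("replica", replica_model)],
)
print(served_by, reply)
```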

2. Human Oversight as a Safety Net

No matter how autonomous an AI is, humans often serve as the final line of defense when problems arise.

- Live handoff to human agents: In customer support or other user-facing services, humans can jump in when the AI encounters confusion or technical hiccups.

- Manual intervention tools: For sensitive use cases—like medical diagnostics or automated trading—experts can override the AI’s decisions when needed.

- Logged decision histories: Human reviewers can investigate how and why an AI system made a particular call.

Why this matters: Human-in-the-loop designs add a critical layer of safety, especially where mistakes could be costly or dangerous.
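A common way to implement this is to route low-confidence predictions to a person and log every decision for later review. The sketch below assumes the model reports a confidence score between 0 and 1; the 0.85 threshold, case IDs, and log format are illustrative choices.

```python
decision_log = []

def route_prediction(case_id, label, confidence, threshold=0.85):
    """Accept confident predictions automatically; send the rest to a human."""
    route = "auto" if confidence >= threshold else "human_review"
    # Keep a record so reviewers can later see what was decided and why.
    decision_log.append(
        {"case_id": case_id, "label": label, "confidence": confidence, "route": route}
    )
    return route

# Hypothetical predictions.
first = route_prediction("case-001", "approve", 0.97)
second = route_prediction("case-002", "reject", 0.61)
print(first, second)
print(decision_log)
```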

3. Smart Systems That Can Detect and Fix Problems

Some AI platforms are built to recognize when they’re going off track—and take corrective action automatically.

- Behavior monitoring: The system watches for unexpected outputs or a sudden drop in accuracy and triggers alerts or recovery protocols.

- Version control and rollbacks: If a newly deployed model version causes issues, the system can switch back to a previously stable release.

- Self-restarting services: Services packaged in containers (for example with Docker) and managed by an orchestration tool such as Kubernetes can be restarted automatically after crashes.

Advantage: These features prevent minor glitches from turning into major outages.
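Behavior monitoring and rollback can work together: track recent accuracy and revert to the last stable version when it drops too far. The classes below are a hypothetical in-memory sketch (the names, window size, and accuracy floor are assumptions), not the API of any particular deployment platform.

```python
from collections import deque

class ModelRegistry:
    """Tracks the last known-good version and the one currently serving."""
    def __init__(self, stable, candidate):
        self.stable = stable
        self.active = candidate

    def rollback(self):
        self.active = self.stable

class AccuracyGuard:
    """Rolls back when accuracy over a full window falls below a floor."""
    def __init__(self, registry, window=5, min_accuracy=0.6):
        self.registry = registry
        self.results = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, correct):
        self.results.append(1 if correct else 0)
        window_full = len(self.results) == self.results.maxlen
        if window_full and sum(self.results) / len(self.results) < self.min_accuracy:
            self.registry.rollback()
            self.results.clear()  # start fresh for the restored version
            return True
        return False

registry = ModelRegistry(stable="v1", candidate="v2")
guard = AccuracyGuard(registry)
# Hypothetical outcomes for the new version: two correct, then four wrong.
rolled_back = [guard.record(ok) for ok in [True, True, False, False, False, False]]
print(registry.active, rolled_back)
```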

4. Always-On Monitoring and Instant Alerts

Constant visibility into an AI system’s performance is key to catching problems early.

- Performance tracking tools: Metrics like processing speed, error rates, and model drift are monitored in real time.

- Custom alert systems: If something breaks or lags, teams are notified via their preferred channels—Slack, SMS, or email.

- Insightful dashboards: Centralized interfaces show the health of AI systems at a glance, helping teams act quickly.

Pro tip: Monitoring doesn’t just catch failures—it also helps identify long-term trends that could lead to issues later.
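At its simplest, an alerting setup is a set of thresholds checked against live metrics. The sketch below assumes metrics arrive as a dictionary and that each rule names a limit and a notification channel; the metric names, limits, and channels are invented, and actually sending the messages is left out.

```python
def evaluate_alerts(metrics, rules):
    """Return (channel, message) pairs for every metric above its limit."""
    alerts = []
    for metric, (limit, channel) in rules.items():
        value = metrics.get(metric)
        if value is not None and value > limit:
            alerts.append((channel, f"{metric}={value} exceeds {limit}"))
    return alerts

# Hypothetical thresholds and a snapshot of current metrics.
rules = {
    "error_rate": (0.05, "slack"),
    "p95_latency_ms": (800, "sms"),
    "drift_score": (0.2, "email"),
}
metrics = {"error_rate": 0.09, "p95_latency_ms": 420, "drift_score": 0.31}

alerts = evaluate_alerts(metrics, rules)
for channel, message in alerts:
    print(f"[{channel}] {message}")
```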

Choosing the Right AI Vendor: Essential Questions to Ask Before You Buy

The AI solutions market is crowded, fast-moving, and filled with bold claims. But not all vendors are built the same—and not all systems are ready for the real world.

If you’re planning to invest in artificial intelligence for your business, due diligence is non-negotiable.

Before you make a commitment, here are the smart questions you should ask every AI vendor to avoid surprises and ensure their solution truly meets your needs.

1. Where does your training data come from?

AI is only as good as the data it learns from. Start by asking:

- Is the data proprietary or publicly sourced?

- How relevant is it to my industry and use case?

- Was the dataset vetted for bias or gaps?

If the model wasn’t trained on data similar to your environment, it may struggle to deliver accurate results.

2. How do you protect user data and ensure compliance?

AI systems often process sensitive information, so it’s critical to know:

- How is data stored, transmitted, and anonymized?

- What security measures are in place?

- Is your platform compliant with regulations like GDPR, CCPA, or HIPAA?

A credible vendor should offer detailed documentation on data handling practices.

3. Can we understand and explain the AI’s decisions?

AI shouldn’t feel like a black box. You’ll want to know:

- Can outputs be traced and explained?

- Is there transparency in how predictions are made?

- Can business users make sense of the results without deep technical knowledge?

Explainability is especially vital if the AI influences high-stakes decisions.

4. What happens when the model starts to drift?

Over time, models can become outdated or misaligned with new data trends. Ask:

- How is model performance monitored?

- Who’s responsible for maintenance and updates?

- How often do you retrain the system?

Without a clear plan for model upkeep, performance tends to degrade, sometimes quickly.

5. Will your AI integrate with our existing tools and workflows?

AI should enhance your systems, not force a rebuild. Be sure to ask:

- Does the platform offer APIs or plug-ins?

- Can it work with our CRM, cloud environment, or data warehouse?

- How easily can it be scaled?

Inflexible systems lead to costly customization down the road.

6. What measures are in place to prevent bias in outputs?

Unchecked bias can lead to unfair outcomes and reputational risk. Be sure to ask:

- How is fairness monitored during training and deployment?

- Do you test the model across different demographic groups?

- Are there controls to flag and review questionable outputs?

Responsible AI should have fairness baked into the development process—not treated as an afterthought.

7. What support do we get during and after deployment?

The real test begins once the system goes live. Get clarity on:

- What kind of onboarding is offered?

- Is live support available? What’s the response time?

- Will we have access to ongoing training and product updates?

A good vendor acts like a long-term partner, not just a product seller.

8. Do you have proven results with companies like ours?

Before you trust a vendor’s promises, look for proof. Ask:

- Can you show us case studies relevant to our industry?

- Do you have references we can speak to?

- What measurable impact did your AI solution have?

If they can’t demonstrate real-world success, consider it a red flag.

9. How transparent is your pricing?

AI costs can escalate if the pricing model isn’t clear. Get specifics on:

- What’s included in the base fee?

- Are there extra charges for training, APIs, or user licenses?

- How will costs change if we scale up?

Make sure there are no hidden fees or surprise charges later on.
